Video I/O Part 1: An Introduction to Video Compression

Written on Thursday April 05, 2018

Video compression is a polarising topic and a key technology for us at Loopbio. In a series of blog posts, beginning with this one, we put our didactic engineer's hat on and share our experience of how best to leverage current compression technologies. We focus on practical benchmarking of video storage solutions, seeking satisfactory trade-offs between three parameters: video quality, disk space and speed.

- Part 1 (this one): an introduction to video compression for novices

- Part 2: fast JPEG decoding

Our company mission is to deliver best in class, easy to use, video analysis solutions. To that end we have created loopy, a platform for working with arbitrarily large collections of videos. It lets users explore, annotate and organize their videos from the comfort of their browsers. loopy also offers state of the art analysis tools, from 3D vision to deep-learning powered tracking.

To achieve our mission, loopy needs to be user friendly when used interactively. It must also make clever use of possibly scarce hardware resources, especially when running computationally intensive tasks. Our infrastructure needs are further complicated by the fact that loopy is offered both as an online subscription-based version and as an on-premise managed server version.

Therefore, delays in bringing video frames both to the browser and to the analysis programs must be minimized. In particular, as we will see, we should be able to read from arbitrary video positions with absolute accuracy, in as little time and with as little computation as possible.

A concise primer on video compression

Let us start with a reading recommendation. This introduction to digital video and the references therein, which you can read from the comfort of a gym machine - if you are into that kind of thing - is not to be missed. It contains an amazingly visual description of the basics of video coding and related topics, from the human visual system to codec wars, touching on many of the topics we will discuss in the next few paragraphs.

Basic terminology

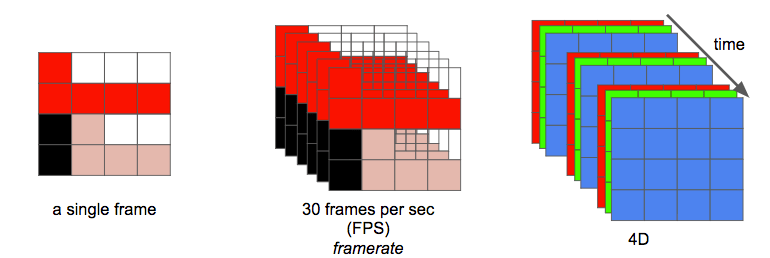

A video is just a sequence of images (frames) shown at a frequency in time (framerate) that is generally, but not necessarily, regular and is usually measured in frames per second (FPS). When stored digitally, these images are composed of a number of pixels, "dots with a color", organized in a grid. The video therefore has a resolution that indicates the shape of this grid, usually given as width (number of pixels per row) and height (number of pixels per column).

How colors are actually represented, and which colors can be represented at all, is given by the so-called color space. Each individual color is represented by one or more numbers indicating the intensity of certain color components. Videos therefore also have a color depth, which is measured in bits and indicates how much information is needed to represent the color of a single pixel. Usual color depths are 8 (for grayscale images), 24 (for "true color" images) and 48 (for "deep color" images).

Compression

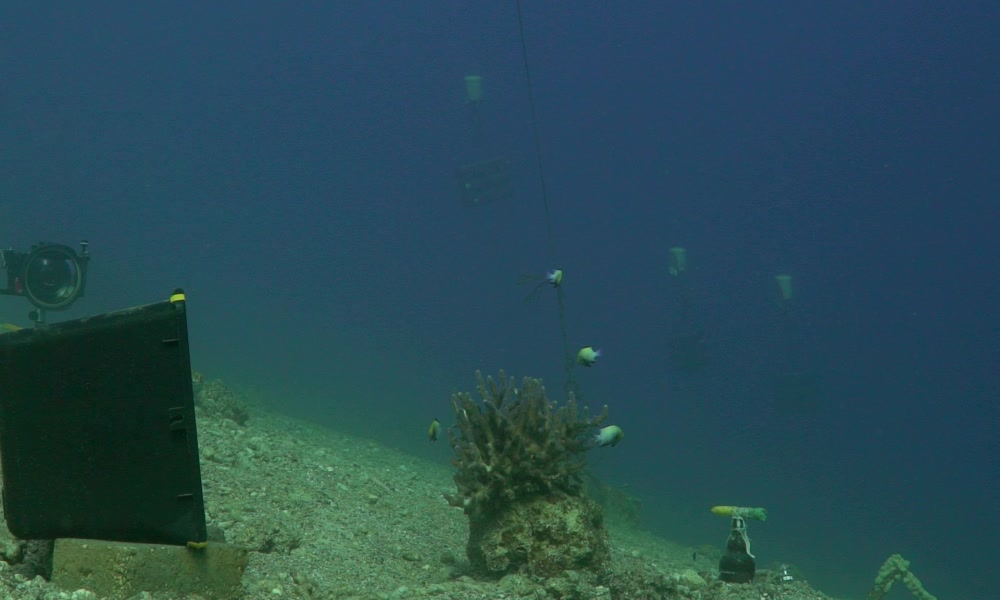

When stored digitally, an uncompressed video needs `width * height * colordepth * framerate * duration` bits. How much is that? As an example, one early video that a client uploaded to loopy had a resolution of 1920x1080, a 24-bit color depth and a fast framerate of 120fps. If uncompressed, this video would need 5 971 968 000 bits per second (this is known as the bitrate). In other words, a minute of such video would use up around 45GB (about 42GiB), so had we stored these videos raw, we would only have been able to keep around 100 minutes. Our client had around 145 hours of beautiful footage of fish schools recorded in the Red Sea, so there is no way that would work.

Obviously no one uses raw video when storing or transmitting digital video. Our client had around 145 hours of footage, but it took up only slightly less than 7TB (instead of roughly 390TB, which is what 145 hours at the bitrate above amounts to!). This is because the videos were compressed.
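The arithmetic above is easy to check. The following is a minimal sketch, assuming that the whole collection shares the resolution, color depth and framerate of the example video; the function names are ours, chosen for illustration.

```python
def raw_bitrate(width, height, color_depth_bits, fps):
    """Bits per second needed by uncompressed video."""
    return width * height * color_depth_bits * fps


def raw_size_bytes(width, height, color_depth_bits, fps, seconds):
    """Bytes needed to store `seconds` of uncompressed video."""
    return raw_bitrate(width, height, color_depth_bits, fps) * seconds / 8


bitrate = raw_bitrate(1920, 1080, 24, 120)
one_minute = raw_size_bytes(1920, 1080, 24, 120, seconds=60)
collection = raw_size_bytes(1920, 1080, 24, 120, seconds=145 * 3600)

print(f"bitrate:    {bitrate:,} bits per second")
print(f"one minute: {one_minute / 1e9:.1f} GB ({one_minute / 2**30:.1f} GiB)")
print(f"145 hours:  {collection / 1e12:.0f} TB")
print(f"raw size relative to 7 TB on disk: about {collection / 7e12:.0f}x")
```

Note the division by 8 when going from bits to bytes, and the difference between decimal (GB, TB) and binary (GiB, TiB) units; both are easy to get wrong when quoting storage figures.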

Video compression takes advantage of spatial redundancies (regions of a single frame with a lot of repetition), temporal redundancies (consecutive frames tend to look very much alike) and the particularities of human visual perception (for example, we can distinguish better between bright and dark than between shades of color) to make storing and transmitting videos leaner, while still keeping good video quality.

The two previous images (courtesy of Simon Gingins) are two consecutive frames from a video in the collection mentioned above. In each image the background is mostly blue (spatial redundancy) and the difference between them is minimal (if you can tell, the fish have moved slightly).
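Both kinds of redundancy can be made tangible with a toy experiment. The sketch below is not how a real video codec works: it uses tiny synthetic grayscale frames, a square standing in for a fish, and general-purpose `zlib` compression, all of which are our assumptions for illustration. It shows that a flat frame compresses far better than a noisy one, and that the difference between two nearly identical noisy frames compresses far better than either frame on its own.

```python
import random
import zlib

WIDTH, HEIGHT = 64, 48  # tiny grayscale frames, one byte per pixel


def flat_background(value=200):
    """A frame where every pixel has the same value (high spatial redundancy)."""
    return bytes([value]) * (WIDTH * HEIGHT)


def noisy_background(seed=0):
    """A frame of pseudo-random pixels (almost no spatial redundancy)."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(WIDTH * HEIGHT))


def draw_fish(frame, x, y, size=4, value=255):
    """Return a copy of `frame` with a bright square 'fish' at (x, y)."""
    pixels = bytearray(frame)
    for row in range(y, y + size):
        for col in range(x, x + size):
            pixels[row * WIDTH + col] = value
    return bytes(pixels)


def frame_difference(previous, current):
    """Per-pixel difference modulo 256, so it can be undone exactly."""
    return bytes((c - p) % 256 for p, c in zip(previous, current))


def compressed_size(data):
    return len(zlib.compress(data, 9))


# Spatial redundancy: a flat frame shrinks dramatically, a noisy one does not.
flat = draw_fish(flat_background(), x=10, y=10)
noisy = draw_fish(noisy_background(), x=10, y=10)
print("raw frame:        ", WIDTH * HEIGHT, "bytes")
print("flat, compressed: ", compressed_size(flat), "bytes")
print("noisy, compressed:", compressed_size(noisy), "bytes")

# Temporal redundancy: the fish moves 2 pixels, the background stays put.
next_noisy = draw_fish(noisy_background(), x=12, y=10)
delta = frame_difference(noisy, next_noisy)
print("noisy difference, compressed:", compressed_size(delta), "bytes")
```

The difference frame is almost entirely zeros, which is why it compresses so well even though each individual noisy frame is close to incompressible.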

The programs that compress and decompress videos are called video codecs (a portmanteau of coder-decoder). While the techniques they use are closely related, there are many different codecs with different characteristics. Different codecs may generate larger or smaller videos, can compress and decompress at different speeds and/or using different amounts of computer resources, can allow better or worse random access patterns, and can produce higher or lower quality videos. In this series of blog posts, we will be interested in finding out which video codecs are competitive, across many parameters, to fill different roles inside our platform.

Compression quality

A very important distinction between codecs is the quality of the output video, that is, how faithfully the compressed video represents the original material. Codecs can trade size for quality. When aiming at reduced size, codecs can use tricks that result in video artifacts. We are probably all used to these kinds of obvious errors when, for example, streaming movies or looking at photos. While watching Netflix, did you ever notice how pixelated that super-red cape from SuperWoman looked?

An important dividing line here is between lossless codecs, which ensure no loss of quality, and lossy codecs, which make no such guarantee. Usually lossy codecs can be made to produce good quality video (almost perceptually lossless) with much higher space savings than lossless codecs by exploiting the redundancies explained above.
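The distinction can be illustrated with a toy example. This is only a sketch under our own assumptions: the "lossy codec" here is nothing more than coarse quantization followed by `zlib`, which is far simpler than what real codecs do, and the pixel data is synthetic. It shows the defining properties: the lossless round trip returns exactly the original data, while the lossy one is smaller but differs from the original by a bounded error.

```python
import random
import zlib


def make_pixels(count=10_000, seed=0):
    """Mid-gray pixels with a little sensor-like noise (values 125..131)."""
    rng = random.Random(seed)
    return bytes(128 + rng.randint(-3, 3) for _ in range(count))


def lossless_roundtrip(pixels):
    """Compress and decompress; returns (compressed size, decoded pixels)."""
    packed = zlib.compress(pixels, 9)
    return len(packed), zlib.decompress(packed)


def lossy_roundtrip(pixels, step=8):
    """Quantize to multiples of `step` before compressing (information is lost)."""
    quantized = bytes((p // step) * step for p in pixels)
    packed = zlib.compress(quantized, 9)
    return len(packed), zlib.decompress(packed)


original = make_pixels()
lossless_size, lossless_pixels = lossless_roundtrip(original)
lossy_size, lossy_pixels = lossy_roundtrip(original)
worst_error = max(abs(a - b) for a, b in zip(original, lossy_pixels))

print("lossless:", lossless_size, "bytes, identical:", lossless_pixels == original)
print("lossy:   ", lossy_size, "bytes, identical:", lossy_pixels == original,
      "worst pixel error:", worst_error)
```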

Note: for this post, and in general for this series, lossless codecs are assumed to be operating in their native colorspace. We are thus ignoring any numerical effects from colorspace conversion.

Lossless codecs receive much less mainstream attention than lossy ones, despite serving many important roles (in loopy and elsewhere). For example, they are usually tasked with compressing video for archival or editing purposes. In this series we will make lossless codecs an integral part of our benchmarks, assessing how well they fare when compressing interesting parts of larger video collections.

In particular, we will investigate their suitability when storing short annotated regions of videos (clips) and frames (crops) for the purposes of batching/training a neural network for object detection (discussed in a future post). In this workload, loopy needs fast-access caches to concrete frames (in particular, frames that are annotated) in order to feed them to our machine learning algorithms.

Types of frames and video seeking

A final video-codec concept we wish to introduce is that of frame type. As we have said, video codecs can exploit intra-frame redundancy, that is, pixel redundancy within the same image, and inter-frame redundancy, that is, smooth changes between consecutive frames. Naively simplified, inter-frame compression uses the difference between frames to encode the video.

Videos compressed this way can contain up to three types of frames. Intra frames (I) are self-contained and can be decoded without referring to any other frame. Predictive (P) frames, on the other hand, require previous frames to be decoded first, while Bi-directional predictive (B) frames require both previous and subsequent frames.

The main use for video is sequential playback. Modern video codecs can employ quite complex combinations of I, P and B frames, and sort the data for frames in an arbitrary order. Usually I frames are much less frequent, because they consume more space. This is one of the reasons why seeking, that is, reading an arbitrary frame from a video, is a slow operation. When requesting an arbitrary frame, it is likely we will need to decode many other frames to get the result.
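To see why seeking gets expensive, consider a deliberately simplified model, which is our assumption and not a description of any particular codec: the video contains only I and P frames, and each P frame depends on the frame immediately before it. Reaching a frame then means decoding everything from the closest preceding I frame onwards.

```python
def frames_to_decode(frame_types, target):
    """Count the frames decoded to display frame `target` (0-based).

    `frame_types` is a string such as "IPPPIPPP". Simplifying assumption:
    only I and P frames, and each P frame depends on the frame right before it.
    """
    if set(frame_types) - {"I", "P"}:
        raise ValueError("this sketch only handles I and P frames")
    last_intra = frame_types.rfind("I", 0, target + 1)
    if last_intra == -1:
        raise ValueError("no I frame at or before the target")
    return target - last_intra + 1


def average_seek_cost(frame_types):
    """Mean number of decoded frames over all possible seek targets."""
    costs = [frames_to_decode(frame_types, i) for i in range(len(frame_types))]
    return sum(costs) / len(costs)


intra_only = "I" * 12                 # every frame is self-contained
long_gop = "I" + "P" * 11             # one I frame followed by 11 P frames
print(average_seek_cost(intra_only))  # 1.0
print(average_seek_cost(long_gop))    # 6.5
```

In this model the average cost of a random seek grows linearly with the distance between I frames, while an intra-only stream always decodes exactly one frame. B frames and reordered frame data, which real codecs use, make the picture more complicated.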

To the best of our knowledge, there are no codecs specifically designed for fast seeking (that is, fast random frame retrieval). Seeking is an important operation for many tasks that loopy needs to do routinely, so when it is too slow, it can negatively affect the user experience. loopy often needs to display arbitrary segments of a video, often accessed in a pseudo-random order (for deep learning or by the user), with the video sometimes on slow underlying storage.

That is why we will also pay attention to seeking performance of video codecs, which is not a topic usually covered by video benchmarks.

Video collections at loopbio

Our company currently focuses on serving the life sciences and, in particular, the behavioral research community. Because of that, we get to analyze some particular kinds of videos. The most common video collections we help analyze contain hours of high-resolution, fast-framerate animal behavior recordings. Usually these come from either still cameras - both in natural environments and behavioral arenas, over and underwater - or aerial (drone) cameras. A recurrent characteristic of these videos is a fairly constant background that, in many cases, is also very simple.

We have spent a few fun minutes browsing YouTube for publicly available examples of these kinds of videos, and have selected just a few:

We will be using these videos to illustrate a few concepts about video compression and to benchmark video compression alternatives in later posts. To finish on an inviting note, we will now show a couple of results from our benchmarks on lossless codecs. We will provide codec descriptions and describe in detail our benchmarking methodology in later installments of this series.

Codec Selection Matters: Video Encoding Benchmark

How fast and how much do lossless codecs compress our benchmarking video suite? We asked a few lossless codecs to re-compress the original videos at different resolutions and we measured both quantities - disk savings and speed. We summarize some of our findings in the following plot (click on the plot legend to show/hide series):

A few codecs contend. ffv1, as we used it, is an intra-frame only codec geared towards a good trade off between compression speed and space savings. huffyuv/ffvhuff is a simple intra-frame only codec geared towards fast decompression. We used ffmpeg to encode for both ffv1 and ffvhuff. H.264 (encoded using ffmpeg bindings to libx264) is a fairly sophisticated codec, with many nuts and bolts that can be tweaked as needed; here we use its lossless mode. Each codec is parametrized in different ways (see and click the legend).
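For readers who want to try these codecs themselves, here is a minimal sketch of how one might ask ffmpeg for lossless encodes from Python. The option sets are illustrative assumptions, not the parametrizations used in our benchmarks, and the file names are placeholders. Running the commands requires an ffmpeg binary built with libx264; the code below only builds and prints them.

```python
import subprocess

# Illustrative encoder options only; not the settings used in the benchmark.
LOSSLESS_ENCODER_ARGS = {
    # -level 3 selects FFV1 version 3, -g 1 makes every frame a keyframe.
    "ffv1": ["-c:v", "ffv1", "-level", "3", "-g", "1"],
    "ffvhuff": ["-c:v", "ffvhuff"],
    # -qp 0 switches libx264 to its lossless mode.
    "h264": ["-c:v", "libx264", "-preset", "ultrafast", "-qp", "0"],
}


def lossless_encode_command(source, destination, codec):
    """Build an ffmpeg command line that re-encodes `source` with `codec`."""
    if codec not in LOSSLESS_ENCODER_ARGS:
        raise ValueError(f"unknown codec: {codec!r}")
    # -y overwrites the destination, -an drops any audio stream.
    return ["ffmpeg", "-y", "-i", source, "-an",
            *LOSSLESS_ENCODER_ARGS[codec], destination]


def encode(source, destination, codec):
    """Run the encode; requires an ffmpeg binary on the PATH."""
    subprocess.run(lossless_encode_command(source, destination, codec), check=True)


for codec in LOSSLESS_ENCODER_ARGS:
    print(" ".join(lossless_encode_command("input.mp4", f"output_{codec}.mkv", codec)))
```

Keep in mind that an encode is only lossless with respect to the pixel format the encoder actually receives; if ffmpeg converts the colorspace on the way in, that conversion can itself change pixel values.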

We also include a lossy baseline - and it is quite a strong baseline - which will serve as reference for speed during our benchmarks. We call it "exploded-jpg" and it essentially works by storing the video by saving each frame individually to a good quality jpeg-compressed file - so it is not a video-codec per se, although there are codecs (mjpeg) that work in an analogous way.
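The idea behind such a baseline can be sketched as a one-file-per-frame store. The class name and the file naming scheme below are hypothetical, chosen only for illustration, and the JPEG encoding itself is assumed to happen elsewhere.

```python
import tempfile
from pathlib import Path


class ExplodedFrameStore:
    """Hypothetical one-file-per-frame layout: <root>/000000042.jpg holds frame 42."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def path_for(self, frame_index):
        return self.root / f"{frame_index:09d}.jpg"

    def write_frame(self, frame_index, jpeg_bytes):
        """Store an already JPEG-encoded frame (encoding happens elsewhere)."""
        self.path_for(frame_index).write_bytes(jpeg_bytes)

    def read_frame(self, frame_index):
        """Return the encoded bytes of one frame without touching any other frame."""
        return self.path_for(frame_index).read_bytes()


with tempfile.TemporaryDirectory() as tmp:
    store = ExplodedFrameStore(tmp)
    store.write_frame(42, b"placeholder for JPEG bytes")
    frame_name = store.path_for(42).name
    frame_bytes = store.read_frame(42)
    print(frame_name, len(frame_bytes), "bytes")
```

Because every frame lives in its own file, fetching frame 42 never requires decoding frames 0 to 41. The price is that no redundancy between frames is exploited.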

Note the wide range of performances. On the vertical axis we plot the space saved by compressing the video, as a percentage of what it would have taken to store it uncompressed. Higher is better, and so H.264 is the winner. On the horizontal axis we plot the speed-up relative to the baseline. Again, the higher the better. So H.264, properly parametrized, is a win-win here. For an introduction to the operation of H.264, see this article.
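Spelled out, the two plotted quantities could be computed as follows. This is a sketch of the definitions as described in the text; the function names and the example numbers are made up for illustration and are not benchmark results.

```python
def space_saved_percent(compressed_bytes, uncompressed_bytes):
    """Space saved, as a percentage of the uncompressed size."""
    return 100.0 * (1.0 - compressed_bytes / uncompressed_bytes)


def speedup(baseline_seconds, codec_seconds):
    """How many times faster than the baseline the codec finished."""
    return baseline_seconds / codec_seconds


# Hypothetical numbers, only to show the units of each axis.
print(space_saved_percent(compressed_bytes=25, uncompressed_bytes=100))  # 75.0
print(speedup(baseline_seconds=10.0, codec_seconds=2.5))                 # 4.0
```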

Why do we have such broad error bars? A statistician would probably start thinking about small data effects. While there might be some truth to that, it does not tell the whole story, or even the most relevant part of it. Instead, this plot is a summary of writing workloads for different video types at different resolutions. Codecs can behave quite differently depending on those conditions. To us, these large error bars send this message: while having good defaults informed by relevant data is important, sometimes it can pay off to engineer for the very particulars of an application. Obviously this is easier if one can count on trustworthy benchmarking tools.

An important note about the baseline speed. It is generally much slower (2x to 4x) than the video codecs. Truth be told, we did not parallelize it. That is, while the video codecs were free to use as many resources from our system as they wanted to - and all of them made the computer sweat a lot - we only allowed the baseline to use 1 sad core. Note that, usually, parallelism needs to be taken into account explicitly in a benchmark. At the gym, I could only read video compression tutorials while taking it easy on the stationary bike. Had I worked out more intensely, I would have lacked the resources to do anything else at the same time.

What we did use for the baseline is a highly optimized JPEG codec. This is too important to be overlooked, as we will discuss soon in this series.

While writing speed is very important for video acquisition systems like our own Motif, for loopy it is of only minor importance. For this reason, from now on we will focus our efforts on thoroughly benchmarking "write-once, read-many times" workloads.

How slow is seeking with lossless codecs?

For our second and, for now, final result, we plotted the speed of retrieving a single random frame from a video compressed with the codecs used above, relative to the baseline.

Video codecs are, in the best case, an order of magnitude slower than the baseline when serving arbitrary video frames. This result holds no matter the underlying storage system (be it an SSD, a spinning disk or a network file system). That would immediately disqualify them for many machine learning workloads, where a program learns to perform some task by being exposed to examples of that task in random order. Random data reading must stay out of the way as much as possible in a workload that contains many other heavy computation components. Therefore, the difference between a high and a low speed solution is the difference between speedy result delivery and resource under-utilization leading to delays that can be counted in days or weeks.
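A measurement of this kind can be sketched with a small timing harness. This is not our benchmarking code: the function names are hypothetical, `read_frame` stands for any callable that returns the frame at a given index, and a serious benchmark would also need to control for caching, warm-up and storage effects.

```python
import random
import time


def time_random_reads(read_frame, num_frames, num_reads=100, seed=0):
    """Mean seconds per call of `read_frame(index)` over random frame indices."""
    rng = random.Random(seed)  # seeded, so every reader sees the same indices
    indices = [rng.randrange(num_frames) for _ in range(num_reads)]
    start = time.perf_counter()
    for index in indices:
        read_frame(index)
    return (time.perf_counter() - start) / num_reads


def relative_seek_speed(codec_reader, baseline_reader, num_frames, **kwargs):
    """Speed of the codec relative to the baseline (below 1 means slower)."""
    codec = time_random_reads(codec_reader, num_frames, **kwargs)
    baseline = time_random_reads(baseline_reader, num_frames, **kwargs)
    return baseline / codec
```

Using the same seeded sequence of indices for every reader keeps the comparison fair, since the cost of a seek can depend heavily on which frame is requested.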

Storing videos as individual JPEG images, our baseline, is in fact the most common way in which video training data is stored. We are exploring whether there is a way to use more efficient access patterns so that video codecs can become an option in this arena, bringing with them further benefits such as higher image quality, better introspectability and web playability.

See you soon

If you are interested in our comprehensive video reading benchmarks, follow us on Twitter at @loopbiogmbh.