Video I/O Part 2: Fast JPEG Decoding

Written on Tuesday April 17, 2018

In the previous installment of our series on Video I/O we threatened thorough benchmarks of video codecs. This series of blog posts is about ways to minimize delays in bringing video frames both to the browser and to video analysis programs, including training deep learning models from video data. In that post we showed plots like this one:

We used, and will keep using, what we called "exploded jpeg" as a baseline when talking about video compression, as encoding images as jpeg is, by far, the most common way to transport image data around in deep learning workloads. Because encoding and, more especially, decoding are core operations for us in loopy, and also because we want to give ourselves a hard time trying to beat baselines, we strive to use the best possible software for encoding and decoding jpeg data.

So what is the fastest way to read and write jpeg images these days? And how can we use it in the most effective way? In this post we demonstrate that using libjpeg-turbo is the way to go, presenting the first independent benchmark (to our knowledge) of the newest libjpeg-turbo version and touching on a few related issues, from python bindings to libjpeg-turbo to accelerated python and libjpeg-turbo conda packages. So let's get started, shall we?

The Contenders

We are going to look across four dimensions here: libjpeg vs libjpeg-turbo, current stable version of libjpeg-turbo (1.5.3) vs the upcoming version (2.0), using libjpeg-turbo with different python wrappers, and using libjpeg-turbo with different parameters controlling the tradeoff between decoding speed and accuracy. On each round there will be a winner that gets to compete in the next one.

There is one main open source library used for jpeg encoding: libjpeg. And there is one main alternative to libjpeg for performance critical applications: libjpeg-turbo. Turbo is a fork of libjpeg where a lot of amazing optimization work has been done to accelerate it. Turbo works on many different computer architectures, and used to be a "drop-in" replacement for libjpeg. This stopped being true when libjpeg decided to adopt some non-standard techniques - perhaps hoping for them to one day become part of the jpeg standard. Turbo decided not to follow that path. In principle this means that there might be some non-standard jpeg images that turbo won't be able to decode [1]. However, given the prevalence of turbo in mainstream software (for example, it is used in web browsers like firefox and chrome, and is a first class citizen in most linux distributions), it is unlikely these incompatibilities will be seen in the wild.

Having decided that libjpeg-turbo is to be used, we turn our attention to the python wrapper used on top of it. Our codebase has a strong pythonic aroma, so we are most interested in reading and writing jpegs from python code. That means using a libjpeg implementation, which is a C library, wrapped in python. We look here at two main wrappers: opencv, which we use as the go-to library for reading images, and a simple ctypes wrapper (modified from pyturbojpeg).

The simple ctypes wrapper exposes more libjpeg specific functionality from the wrapped C library such as faster but less accurate decoding modes. Usually these modes are deactivated by default, since they result in "less pleasant" images (for humans) in some circumstances. However certain algorithms might not notice these differences - for example tensorflow activates some of them by default under the (likely unchecked) premise that it won't matter for model performance.

The Benchmark

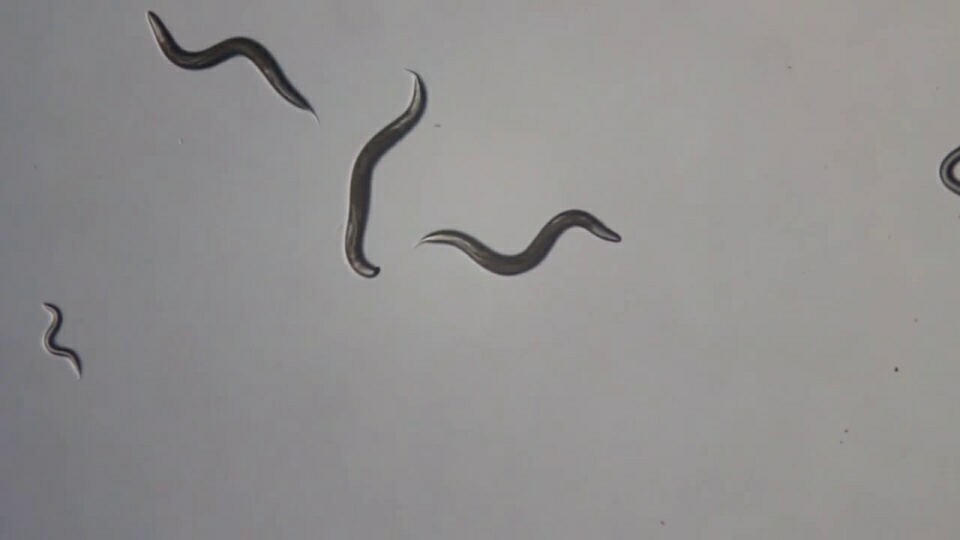

To measure how fast different versions of the libjpeg library can compress and decompress, we have used 23 different images from a public image compression benchmark dataset, some of our clients' videos and even pictures of ourselves. We used these images at three different sizes, corresponding (without modifying the aspect ratio) to 480x270 (small), 960x540 (medium) and 1920x1080 (large) resolutions. We always used YUV420 as the encoded color space and BGR as the pixel format.

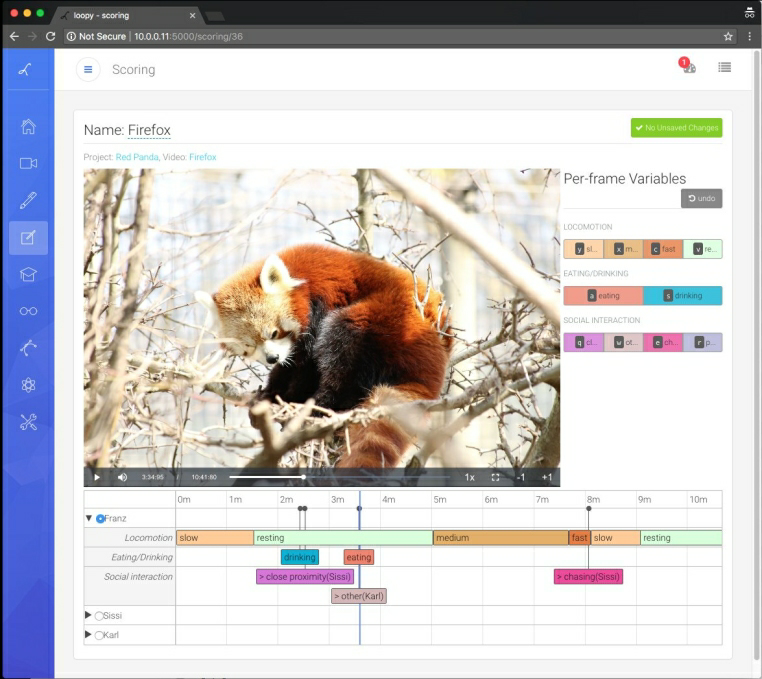

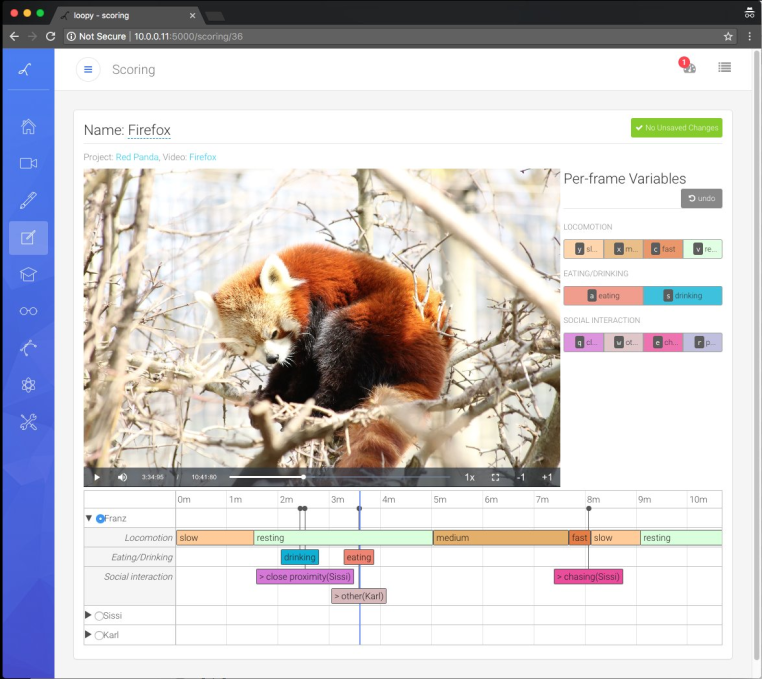

The following are three images from our benchmark dataset, at "medium" size, as originally presented to the codecs and after compression + decompression (with jpeg encoding quality set at 95 and using the fastest and least precise libjpeg-turbo decoding settings). Can you tell which one is the original and which one is the round-tripped version? (Note: we have shuffled these a bit to make the challenge more interesting.)

All data in this post is summarized across all images, but it is important to note that when dealing with compression, results might vary substantially depending on the kind of images to be stored. In specific cases, such as when all images are similar, which might happen when storing video data as jpegs, it might be useful to select encoding/decoding parameters tailored to the data.

For each image and codec configuration we measured the round-trip encoding-decoding speed multiple times, with randomized measurement order. We have checked that each roundtrip provides acceptable quality, using perceptual image comparison between the original image and the roundtripped one.

We have timed libjpeg and libjpeg-turbo via python wrappers, and consequently the measurements always include some python specific costs - such as the time taken to allocate memory to hold the results. We expect that some speedups can be achieved by optimizing these wrappers' memory usage strategies. We only measure speeds for image data already in RAM and that is expected to be "cache-warm", so these microbenchmarks represent a somewhat idealized situation and should be complemented with I/O and proper workload context. All measurements were made on a single core of an otherwise idle machine, sporting an intel i7-6850K and fairly slow RAM.
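The measurement procedure described above can be sketched roughly as follows. This is a minimal illustration, not the harness used for this post: the function name `benchmark_roundtrips`, the use of the median, and the zlib stand-in codecs are all assumptions made so the example runs with only the standard library. A real run would plug in jpeg encode/decode callables and replace the equality check with a perceptual quality check.

```python
import random
import time
import zlib
from collections import defaultdict
from statistics import median


def benchmark_roundtrips(images, codecs, repeats=5, seed=0):
    """Time encode+decode round trips in randomized order.

    images: dict of name -> bytes (raw pixel data already in RAM)
    codecs: dict of name -> (encode, decode) callables working on bytes
    Returns a dict of (codec_name, image_name) -> median seconds per round trip.
    """
    rng = random.Random(seed)
    # One job per (codec, image, repetition), shuffled so that no
    # configuration systematically benefits from running first or last.
    jobs = [(c, i) for c in codecs for i in images for _ in range(repeats)]
    rng.shuffle(jobs)

    timings = defaultdict(list)
    for codec_name, image_name in jobs:
        encode, decode = codecs[codec_name]
        raw = images[image_name]
        start = time.perf_counter()
        decoded = decode(encode(raw))
        elapsed = time.perf_counter() - start
        # The stand-in codec is lossless; a lossy codec such as jpeg
        # would need a perceptual quality check here instead.
        assert decoded == raw
        timings[(codec_name, image_name)].append(elapsed)
    return {key: median(values) for key, values in timings.items()}


# Stand-in codecs: zlib at two compression levels (NOT jpeg).
codecs = {
    "zlib_fast": (lambda b: zlib.compress(b, 1), zlib.decompress),
    "zlib_best": (lambda b: zlib.compress(b, 9), zlib.decompress),
}
# Synthetic buffers with roughly the byte counts of small and medium BGR images.
images = {
    "small": bytes(range(256)) * (480 * 270 * 3 // 256),
    "medium": bytes(range(256)) * (960 * 540 * 3 // 256),
}
results = benchmark_roundtrips(images, codecs)
```

Shuffling all repetitions together, rather than timing each configuration in a tight loop, spreads effects such as cache state and CPU frequency changes across all contenders.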

The Results

Encoding Speed

The following table shows average space savings for the benchmarked encode qualities. These are identical for all the libjpeg variants used and are compared against the space taken by the uncompressed image.

| Encode Quality | Average Space Savings (%) |
|---|---|
| 80 | 94.1 ± 2.8 |
| 95 | 87.2 ± 5.4 |
| 99 | 77.6 ± 8.4 |
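For reference, here is a small sketch of how a space savings figure like the ones in the table can be computed. It assumes the usual definition (one minus the ratio of compressed to uncompressed size) and an uncompressed image of 3 bytes per pixel, as for 8-bit BGR; the function name and the example sizes are illustrative, not taken from the benchmark.

```python
def space_savings_percent(width, height, encoded_size_bytes, channels=3):
    """Percentage of bytes saved relative to the uncompressed 8-bit image."""
    raw_size = width * height * channels
    return 100.0 * (1.0 - encoded_size_bytes / raw_size)


# A "medium" 960x540 BGR image takes 960 * 540 * 3 = 1,555,200 bytes raw.
raw = 960 * 540 * 3
# Hypothetical encoded size: one eighth of the raw size.
example = space_savings_percent(960, 540, encoded_size_bytes=raw // 8)
```

With these illustrative numbers the savings come out at 87.5%, which is in the range reported for quality 95.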

Before showing our results summary, let us enumerate again the contenders:

- opencv_without_turbo: opencv wrapping libjpeg 9b

- opencv_with_turbo: opencv wrapping libjpeg-turbo stable (1.5.3)

- turbo_stable: ctypes wrapper over libjpeg-turbo stable (1.5.3)

- turbo_beta: ctypes wrapper over libjpeg-turbo 2.0 beta1 (1.5.90)

- turbo_beta_fast_dct: like turbo_beta, activating "fast DCT" decoding for all passes

- turbo_beta_fast_upsample: like turbo_beta, activating "fast upsampling" decoding

- turbo_beta_fast_fast: like turbo_beta, activating both "fast DCT" and "fast upsampling"

The following plot shows how encoding speed varies across different compression qualities (you can show and hide contenders by clicking on the legend). We can see that libjpeg-turbo is a clear winner. opencv_without_turbo is doing the same job as its turbo counterpart opencv_with_turbo, just between 3 and 7 times slower. There is a second relatively large gap between using opencv and using turbo directly via ctypes, indicating that for high performance applications it would be worth using more specific APIs. Finally, the upcoming version of libjpeg-turbo also brings a small performance bump worth pursuing.

Decoding Speed

The following plot shows decoding speed differences between our contenders, as a function of the image quality.

Again, turbo is just much faster than vanilla libjpeg, using the ctypes wrapper is much faster than using opencv, and using the newer version of turbo is worth it.

Three new candidates appear slightly on top of the speed ranking: turbo_beta_fast_dct, turbo_beta_fast_upsample and turbo_beta_fast_fast. These activate options that trade accuracy (or visual pleasantness) for decompression speed. They are deactivated by default in libjpeg-turbo, but other wrapper libraries, notably tensorflow, do activate them by default under the premise that machine learning should not be affected by the loss of accuracy. Just as our tests did not find any relevant difference in speed, they did not show any elevated loss in visual perception scores either, so we do not have a strong opinion on activating them or not; we just think the choice is now mostly irrelevant.
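As an illustration of how these modes are selected, the TurboJPEG C API takes a bit mask of flags when decompressing. The two constants below mirror the values defined in libjpeg-turbo's turbojpeg.h for the 1.5 / 2.0 series; the mapping from our contender names to flag combinations is our reading of the descriptions above, written as a sketch rather than copied from the benchmark code.

```python
# Flag values as defined in libjpeg-turbo's turbojpeg.h (1.5.x / 2.0.x).
TJFLAG_FASTUPSAMPLE = 256  # faster, less accurate chroma upsampling
TJFLAG_FASTDCT = 2048      # faster, less accurate DCT/IDCT

# Assumed mapping from contender name to decode flags (illustrative).
DECODE_FLAGS = {
    "turbo_beta": 0,
    "turbo_beta_fast_dct": TJFLAG_FASTDCT,
    "turbo_beta_fast_upsample": TJFLAG_FASTUPSAMPLE,
    "turbo_beta_fast_fast": TJFLAG_FASTDCT | TJFLAG_FASTUPSAMPLE,
}


def is_enabled(flags, flag):
    """Return True if the given flag bit is set in the flags mask."""
    return (flags & flag) == flag


fast_fast = DECODE_FLAGS["turbo_beta_fast_fast"]
```

A ctypes wrapper would pass such a mask as the `flags` argument of the decompression call; wrappers that do not expose it leave you with the library defaults.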

Why is the ctypes wrapper faster than opencv? There are probably several reasons, but a brief look at the opencv implementation reveals a clear suspect. With libjpeg-turbo you can specify which pixel format the jpeg data is using (the jpeg standard is actually agnostic about the order in which the color channels appear in the file) to avoid unneeded color space conversions. OpenCV instead goes a long way to non-optionally convert between RGB and BGR, probably to ensure that jpeg data is always RGB (which is the more common use) while uncompressed data is always BGR (a contract for opencv). Add to this that opencv barely exposes some of the features of libjpeg and does not have libjpeg-turbo specific bindings, and our advice here would be to use a more specific wrapper to libjpeg-turbo.

Talking about wrappers, let's look at the last plot (for today). Here we show decoding speeds as a function of image size.

The larger the image, the faster we decompress. This is normal: there is some work that needs to be done before and after each decompression. The take home message here is, to our mind, to improve the wrappers to minimize constant performance overheads they might introduce. An obvious improvement is to use an already allocated (pinned if planning to use in GPUs) memory pool. This should prove beneficial, for example, when feeding minibatches to deep learning algorithms. A more creative improvement would be to stack several images together and compress them into the same jpeg buffer.
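A simple cost model makes the effect of constant overheads concrete. The sketch below assumes decoding time is a fixed per-call cost plus a cost proportional to the number of pixels; the two constants are hypothetical values chosen only for illustration, not measurements from our benchmark.

```python
def decode_throughput(pixels, fixed_overhead_s, seconds_per_pixel):
    """Pixels decoded per second under a 'fixed cost + per-pixel cost' model."""
    total_time = fixed_overhead_s + pixels * seconds_per_pixel
    return pixels / total_time


SIZES = {
    "small": 480 * 270,
    "medium": 960 * 540,
    "large": 1920 * 1080,
}

# Hypothetical costs: 200 microseconds per call, 2 nanoseconds per pixel.
FIXED_OVERHEAD_S = 200e-6
SECONDS_PER_PIXEL = 2e-9

throughputs = {
    name: decode_throughput(pixels, FIXED_OVERHEAD_S, SECONDS_PER_PIXEL)
    for name, pixels in SIZES.items()
}
```

Under this model throughput grows with image size and approaches, but never reaches, `1 / SECONDS_PER_PIXEL`. Shrinking the fixed overhead (for example by reusing preallocated output buffers) helps small images the most.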

Note also that there are several features of turbo we have not explored here. An important example is support for partial decoding (decoding a region, or crop, of an image without doing all the work to decode the whole image), which was introduced in turbo recently (partially by google) and was then exposed in tensorflow. We have not actually found ourselves in need of these advanced features, but should the need arise, we are happy to know we have our backs covered by a skilled community of people pursuing the same goal as us: to get image compression and decompression times out of our relevant bottleneck equations.

Speeding Up Your Code

TL;DR: use our opencv and libjpeg-turbo conda packages

So how does one use libjpeg-turbo? Well, as we mentioned, libjpeg-turbo is everywhere these days - so some software you run is probably already using it. If you are using Firefox or Chrome to read this post, it is very likely that jpeg images are being decompressed using turbo. If you use tensorflow to read your images, you are using turbo. On many linux distributions libjpeg-turbo is either the default package or can be installed to replace the vanilla libjpeg package. We are not very knowledgeable about the story on other platforms, but we suspect that libjpeg-turbo's reach and importance extend to practically any platform where jpeg needs to be processed.

What if you use the conda package manager? In this case you might be a bit out of luck, because the two main package repositories (defaults and conda-forge) have moved to exclusively use libjpeg 9b in their stack. If you try to use a libjpeg-turbo package in a modern conda environment, chances are that you will bump into severe (segfaulty) problems. This is a bit of a disappointment given that conda is commonly used in the scientific, data analysis and machine learning fields these days.

But good news! If you are on linux your luck has changed - all you need to do is use our opencv and libjpeg-turbo packages (which bring along our ffmpeg package). Because we use these packages in loopy, we keep them in sync with the main conda channels and ready to be used by any conda user.

These packages avoid problems with parallel installations of libjpeg 9 and libjpeg-turbo, and offer a few other goodies (like the ability to choose between GPL and non-GPL versions of ffmpeg, or patches to control video decoding threading when done via opencv). The creation of these modified packages was no small feat and will also be covered in a future blog post. In the meantime, you can just use any of these command lines to use the packages:

    # before running this, you need conda-forge in your channels
    conda config --add channels conda-forge

    # this would get you our latest packages
    conda install -c loopbio libjpeg-turbo opencv

    # this would get you and pin our current packages (N.B. requires conda 4.4+)
    conda install 'loopbio::opencv=3.4.1=*_2' 'loopbio::libjpeg-turbo=1.5.90=noclob_prefixed_gcc48_0'

Or add something like this to your environment specifications (note these are the exact software versions we used when benchmarking for this post):

    name: jpegs-benchmark
    channels:
      - loopbio
      - conda-forge
      - defaults
    dependencies:
      # uncomment any of these to get the opencv / turbo combo you want
      # note that, at the moment of writing, these pins are not always respected
      # see: https://github.com/conda/conda/issues/6385

      # opencv compiled against turbo 2.0beta1
      - loopbio::opencv=3.4.1=*_2  # compiled against turbo 2.0beta1
      # opencv compiled against turbo 1.5.3
      # - loopbio::opencv=3.4.1=*_1
      # opencv compiled against libjpeg 9
      # - conda-forge::opencv=3.4.1

      # libjpeg-turbo 2.0beta1
      - loopbio::libjpeg-turbo=1.5.90=noclob_prefixed_gcc48_0
      # libjpeg-turbo 1.5.3
      # - loopbio::libjpeg-turbo=1.5.3

Finally, you can use these packages with our pyturbojpeg fork to achieve better performance than generic libjpeg wrappers like PIL or opencv. If you install both the turbo packages and our wrapper, you can easily compress and decompress jpeg data like this:

    from turbojpeg import Turbojpeg
    turbo = Turbojpeg()
    roundtrip = turbo.decode(turbo.encode(image))

Conclusions

If you need very fast jpeg encoding and decoding:

- Use turbo

- Use turbo with fast wrappers

- Use the latest version of turbo and decide for yourself if using faster modes for encoding and decoding is worth it.

- If you use conda then use our accelerated jpeg and opencv packages

We also think that any benchmark for, let's say, image minibatching for deep learning, should explicitly include a solution based on libjpeg-turbo as a contender.

Finally, use turbo also if you do not need any of these things as it is very easy to install (on linux) and will probably magically speed up many other things on your computer.

It is good to remember that open source software always needs a hand.

[1] From the developers: libjpeg-turbo is currently under consideration for becoming an official ISO/ITU-T reference implementation. Furthermore, the libjpeg 'SmartScale' extension has not been adopted, and the likelihood of it being used even if it were is low.